
Meta-information about environment maps

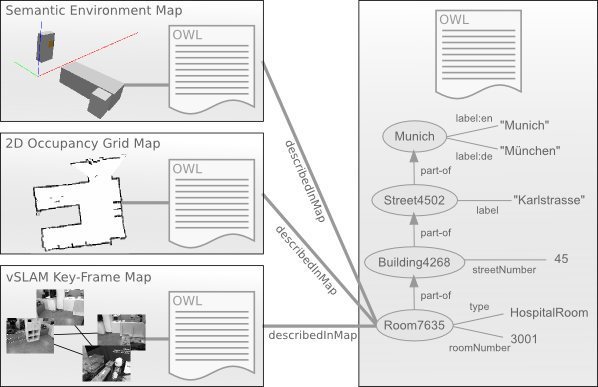

The RoboEarth project deals with the exchange of knowledge between robots. In this context, a central problem is how to describe which environment a map covers, so that a robot can query for a map and retrieve the right one. The following approach has been chosen in RoboEarth:

- Hierarchical, symbolic description similar to human-readable addresses: Country, city, street, building, floor, room (described by room number or room type)

- These upper-level categories are described as instances (e.g. Munich as an instance of City) that are linked by the transitive properPhysicalParts relation

- This makes it possible to query based on a conjunction of class restrictions, e.g. for room 3006 in a building on Karlstrasse in Munich.
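The idea of linking address-like instances by a transitive part-of relation and then querying with a conjunction of restrictions can be illustrated outside the knowledge base. The following is a minimal Python sketch, not the KnowRob implementation: the `Place` class, the helper names, and the instances `Building1` and `Floor3` are assumptions made up for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Place:
    name: str
    kind: str                          # e.g. "City", "Street", "Building", "Room"
    room_number: Optional[str] = None
    # Direct parts only; indirect parts follow from transitivity.
    parts: List["Place"] = field(default_factory=list)


def all_parts(place):
    """Yield every direct and indirect part of a place (transitive closure)."""
    for part in place.parts:
        yield part
        yield from all_parts(part)


def find_rooms(city, street_name, room_number):
    """Rooms with the given number in some building on the given street."""
    return [
        room
        for street in all_parts(city)
        if street.kind == "Street" and street.name == street_name
        for building in all_parts(street)
        if building.kind == "Building"
        for room in all_parts(building)
        if room.kind == "Room" and room.room_number == room_number
    ]


# Hypothetical instances (only Munich, Karlstrasse and room 3006 come from the text).
room = Place("Room3006", "Room", room_number="3006")
floor = Place("Floor3", "Floor", parts=[room])
building = Place("Building1", "Building", parts=[floor])
street = Place("Karlstrasse", "Street", parts=[building])
munich = Place("Munich", "City", parts=[street])

matches = find_rooms(munich, "Karlstrasse", "3006")
print([r.name for r in matches])
```

Because the relation is followed transitively, the room is found even though it is only a direct part of the floor, not of the building or the street.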

Multiple maps can describe the same environment in different ways, for example a 2D occupancy grid (used e.g. for laser-based localization) or a semantic environment map consisting of object instances and their properties. All of them can be assigned to the same room, floor, or building, and the downloading robot decides which one to use based on its own properties and requirements.
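How a robot might pick among several maps of the same room can be sketched as follows. This is only an illustration under stated assumptions: the `MapDescription` class, the type labels, the URIs, and the preference-order selection are hypothetical and not taken from RoboEarth.

```python
from dataclasses import dataclass


@dataclass
class MapDescription:
    uri: str
    map_type: str   # hypothetical labels, e.g. "OccupancyGrid2D"
    room: str


def select_map(maps, room, supported_types):
    """Return the first map of `room` whose type the robot supports.

    `supported_types` is ordered by preference; None means no usable map.
    """
    for wanted in supported_types:
        for candidate in maps:
            if candidate.room == room and candidate.map_type == wanted:
                return candidate
    return None


available = [
    MapDescription("example:grid1", "OccupancyGrid2D", "Room3006"),
    MapDescription("example:semmap1", "SemanticEnvironmentMap", "Room3006"),
]

# A robot that only localizes with a laser scanner asks for grid maps only.
laser_only = select_map(available, "Room3006", ["OccupancyGrid2D"])
# A robot that prefers semantic maps but can fall back to a grid.
prefers_semantic = select_map(
    available, "Room3006", ["SemanticEnvironmentMap", "OccupancyGrid2D"]
)
```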

Objects in the map

The objects in the map are represented using the general spatio-temporal object pose representation used in KnowRob. More recent information about the objects, e.g. updated poses, can therefore be incorporated transparently by simply storing the new detections.

Articulation information is stored as a property of the respective objects. The relation between an object's parts (e.g. the frame of a cupboard and its door) is explicitly described using OWL properties, and articulation properties like joint limits are attached to the hinge. The representation is compatible with the description of joints in the ROS articulation stack.
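The principle of updating object poses by only appending new detections can be sketched in a few lines. This is a simplified Python illustration, not KnowRob's data model: the `Detection` and `PoseStore` classes, the `translation` helper, and the timestamps are assumptions for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Detection:
    obj: str
    time: float                 # timestamp in seconds (assumed unit)
    pose: Tuple[float, ...]     # 4x4 pose matrix, flattened row by row


def translation(x, y, z):
    """Pose matrix for a pure translation (identity rotation)."""
    return (1.0, 0.0, 0.0, x,
            0.0, 1.0, 0.0, y,
            0.0, 0.0, 1.0, z,
            0.0, 0.0, 0.0, 1.0)


class PoseStore:
    """Append-only store: old detections are kept, never overwritten."""

    def __init__(self):
        self._detections = []

    def add(self, detection):
        self._detections.append(detection)

    def pose_at(self, obj, time):
        """Pose from the latest detection of `obj` made at or before `time`."""
        candidates = [d for d in self._detections
                      if d.obj == obj and d.time <= time]
        if not candidates:
            return None
        return max(candidates, key=lambda d: d.time).pose


store = PoseStore()
store.add(Detection("cupboard1", 10.0, translation(1.0, 2.0, 0.0)))
store.add(Detection("cupboard1", 20.0, translation(1.5, 2.0, 0.0)))
```

Querying with a time of 15.0 returns the first pose, while any time from 20.0 onwards returns the updated one, so the history stays available alongside the current belief.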

Rotational joints

The general structure for rotational joints is the following:

- cupboard1 – SemanticMapPerception10 – PoseMatrix10
  - type Cupboard
  - properPhysicalParts hinge1
  - properPhysicalParts door1
  - properPhysicalParts handle1

- hinge1 – TouchPerception11 – PoseMatrix11
  - type RotationalJoint
  - minJointValue 0.1
  - maxJointValue 0.9
  - connectedTo-Rigidly cupboard1
  - connectedTo-Rigidly door1
  - radius 0.3

- door1 – SemanticMapPerception12 – PoseMatrix12
  - type Door
  - properPhysicalParts handle1

The rotation happens around the z-axis of the joint, described by the pose matrix observed by TouchPerception11 (the tactile estimation of the joint parameters).
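To make the geometry concrete, the following Python sketch computes where a point on the door at distance `radius` from the hinge ends up for a given joint value. It is only an illustration under several assumptions that the text does not state: joint values are taken to be angles in radians, the radius is in meters, the zero angle points along the joint's x-axis, and requested values are clamped to the joint limits. The function names and the example pose are hypothetical.

```python
import math


def clamp(value, lower, upper):
    """Restrict a joint value to the interval [lower, upper]."""
    return max(lower, min(upper, value))


def transform(pose, point):
    """Apply a 4x4 homogeneous pose (list of rows) to a 3D point."""
    x, y, z = point
    return tuple(row[0] * x + row[1] * y + row[2] * z + row[3]
                 for row in pose[:3])


def point_on_door(joint_pose, radius, angle, min_angle, max_angle):
    """Position of a point at `radius` from the hinge, in the map frame.

    The point rotates around the z-axis of the joint frame.
    """
    angle = clamp(angle, min_angle, max_angle)
    local = (radius * math.cos(angle), radius * math.sin(angle), 0.0)
    return transform(joint_pose, local)


# Hypothetical hinge pose: no rotation, hinge located at (1.0, 2.0, 0.5).
hinge_pose = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, 2.0],
    [0.0, 0.0, 1.0, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]

# Limits and radius as in the hinge1 example above.
closed = point_on_door(hinge_pose, 0.3, 0.0, 0.1, 0.9)
opened = point_on_door(hinge_pose, 0.3, 2.0, 0.1, 0.9)
```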

Prismatic joints

Prismatic joints are described in a similar way, with parameters that differ slightly from those of rotational joints:

- cupboard2 – SemanticMapPerception20 – PoseMatrix20
  - type Cupboard
  - properPhysicalParts slider2
  - properPhysicalParts drawer2
  - properPhysicalParts handle2

- slider2 – TouchPerception21 – PoseMatrix21
  - type PrismaticJoint
  - minJointValue 0.0
  - maxJointValue 0.3
  - connectedTo-Rigidly cupboard2
  - connectedTo-Rigidly drawer2
  - direction dirvec2

- drawer2 – SemanticMapPerception22 – PoseMatrix22
  - type Drawer
  - properPhysicalParts handle2

- dirvec2
  - type Vector
  - vectorX 1
  - vectorY 0
  - vectorZ 0

The direction of articulation of prismatic joints is explicitly described by a direction vector.
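As a rough illustration of how the direction vector and the joint limits work together, the sketch below computes the displacement of a drawer for a requested joint value. It is a Python sketch under assumptions not stated in the text: joint values are treated as distances in meters, the direction vector is normalized before use, and out-of-range values are clamped. The function name is hypothetical, and the coordinate frame of the vector is left open.

```python
import math


def prismatic_offset(direction, value, min_value, max_value):
    """Displacement of the moving part along the joint direction.

    `direction` is an (x, y, z) vector; it is normalized so that `value`
    is the travelled distance. Values outside the limits are clamped.
    """
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0.0:
        raise ValueError("direction vector must not be zero")
    value = max(min_value, min(max_value, value))
    return tuple(value * c / length for c in direction)


# Direction and limits as in the slider2 / dirvec2 example above.
half_open = prismatic_offset((1.0, 0.0, 0.0), 0.15, 0.0, 0.3)
fully_open = prismatic_offset((1.0, 0.0, 0.0), 0.5, 0.0, 0.3)
```

With these inputs, a requested value of 0.5 is limited to the maximum of 0.3, so the drawer never moves beyond its stated range.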

Example queries

Query for a map that belongs to room 3001 in Munich:

    ?- rdf_has(M, rdfs:label, literal(like('*Munich*'), _)),
       owl_has(M, knowrob:properPhysicalParts, R),
       owl_has(R, knowrob:roomNumber, literal(type(_, '3001'))),
       owl_has(R, knowrob:describedInMap, Map).

    M = 'http://ias.cs.tum.edu/kb/ias_hospital_room.owl#Munich',
    R = 'http://ias.cs.tum.edu/kb/ias_hospital_room.owl#Room7635',
    Map = 'http://ias.cs.tum.edu/kb/ias_hospital_room.owl#SemanticEnvironmentMap7635'