Google Summer of Code 2014

The Institute for Artificial Intelligence at the University of Bremen, the maintainer of the KnowRob system, has been selected as a mentoring organization for the Google Summer of Code 2014. The initiative, started in 2005, sponsors students to contribute code to selected open source projects during the summer months.

One of the proposed projects extends the methods for segmenting and classifying CAD models of objects (see previous blog post). You can find more information about the proposed projects, as well as contact information for the designated mentors, on our Google Summer of Code page.

Decomposing CAD Models into their Functional Parts

Today’s robots still lack comprehensive knowledge bases about objects and their properties. Yet manipulation tasks require a lot of such knowledge: a robot must identify abstract concepts like a “handle” or the “blade of a spatula” and ground them in concrete coordinate frames that can be used to parametrize its actions.

In a recent paper, we presented a system that enables robots to use CAD models of objects as a knowledge source and to perform logical inference about object components that have been automatically identified in these models. The system includes several algorithms for mesh segmentation and geometric primitive fitting, which are integrated into the robot’s knowledge base as procedural attachments to the semantic representation. Bottom-up segmentation methods are complemented by a top-down, knowledge-based analysis of the identified components. An evaluation on a diverse set of object models downloaded from the Internet shows that the algorithms reliably detect several kinds of object parts.
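This post does not say which fitting algorithms the paper uses. As an illustration of what "geometric primitive fitting" on a mesh segment can look like, here is a minimal sketch of one standard technique, an algebraic least-squares sphere fit, assuming a segment is given simply as a list of vertex coordinates. The function names (`fit_sphere`, `rms_residual`) are hypothetical and not part of KnowRob; a small residual would suggest that the segment is approximately spherical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. coplanar points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def fit_sphere(points: Sequence[Point]) -> Tuple[Point, float]:
    """Hypothetical helper: algebraic least-squares sphere fit.

    A point on a sphere with center (a, b, c) and radius r satisfies
    x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d, with d = r^2 - a^2 - b^2 - c^2,
    which is linear in (a, b, c, d). We solve the normal equations.
    """
    if len(points) < 4:
        raise ValueError("need at least 4 points")
    ata = [[0.0] * 4 for _ in range(4)]
    atb = [0.0] * 4
    for x, y, z in points:
        row = [2 * x, 2 * y, 2 * z, 1.0]
        rhs = x * x + y * y + z * z
        for i in range(4):
            atb[i] += row[i] * rhs
            for j in range(4):
                ata[i][j] += row[i] * row[j]
    a, b, c, d = _solve(ata, atb)
    radius = math.sqrt(d + a * a + b * b + c * c)
    return (a, b, c), radius


def rms_residual(points: Sequence[Point], center: Point, radius: float) -> float:
    """Root-mean-square distance of the points from the fitted sphere surface."""
    sq = [(math.dist(p, center) - radius) ** 2 for p in points]
    return math.sqrt(sum(sq) / len(sq))
```

For example, the six points `(3, 2, 3)`, `(-1, 2, 3)`, `(1, 4, 3)`, `(1, 0, 3)`, `(1, 2, 5)` and `(1, 2, 1)` yield a center of approximately `(1, 2, 3)` and a radius of approximately `2`. A real pipeline would fit several primitive types (planes, cylinders, cones, ...) per segment and keep the best-fitting one.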

Aligning Specifications of Everyday Manipulation Tasks

Recently, there has been growing interest in enabling robots to use task instructions from the Internet and to share tasks they have learned with each other. To competently use, select and combine such instructions, robots need to be able to find out if different instructions describe the same task, which parts of them are similar and which ones differ.

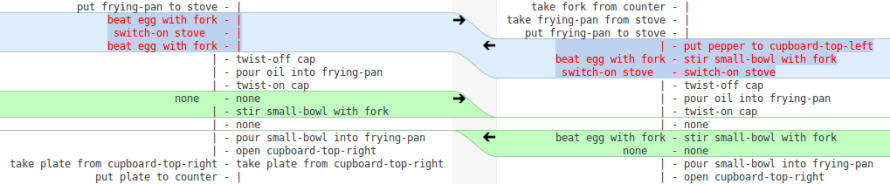

In a recent paper, we investigated techniques for automatically aligning symbolic task descriptions. We propose adapting and extending sequence alignment algorithms that are commonly used in bioinformatics so that they can be applied to robot action specifications. The extensions include methods for comparing complex sequence elements, for taking the semantic similarity of actions into account, and for aligning descriptions at different levels of granularity. We evaluate the algorithms on two large datasets of observations of human everyday tasks and show that they can align action sequences even when different subjects performed the task in very different ways.
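As a rough illustration of the bioinformatics starting point, here is a minimal sketch of Needleman-Wunsch global alignment with a pluggable similarity function, so that related but non-identical actions can still be paired. It assumes actions are plain type names; the toy taxonomy `PARENT` and the scoring in `semantic_sim` are hypothetical and not KnowRob's. The sketch does not cover the paper's extensions for complex elements or for aligning at different levels of granularity.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Action = str
Pair = Tuple[Optional[Action], Optional[Action]]

# Hypothetical toy taxonomy: each action type mapped to a parent class.
PARENT: Dict[str, str] = {
    "Reaching": "Movement",
    "Transporting": "Movement",
    "Grasping": "Manipulation",
    "Releasing": "Manipulation",
}


def semantic_sim(a: Action, b: Action) -> float:
    """Hypothetical score: identical > same parent class > unrelated."""
    if a == b:
        return 2.0
    if PARENT.get(a) is not None and PARENT.get(a) == PARENT.get(b):
        return 0.5
    return -1.0


def needleman_wunsch(seq_a: Sequence[Action], seq_b: Sequence[Action],
                     sim: Callable[[Action, Action], float],
                     gap: float = -1.0) -> Tuple[float, List[Pair]]:
    """Global alignment; None in a pair marks a gap in that sequence."""
    n, m = len(seq_a), len(seq_b)
    # score[i][j]: best score for aligning seq_a[:i] with seq_b[:j].
    score = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = score[i - 1][0] + gap
    for j in range(1, m + 1):
        score[0][j] = score[0][j - 1] + gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(
                score[i - 1][j - 1] + sim(seq_a[i - 1], seq_b[j - 1]),  # pair
                score[i - 1][j] + gap,  # gap in seq_b
                score[i][j - 1] + gap,  # gap in seq_a
            )
    # Trace back from the bottom-right cell to recover one optimal alignment.
    pairs: List[Pair] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sim(seq_a[i - 1], seq_b[j - 1]):
            pairs.append((seq_a[i - 1], seq_b[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            pairs.append((seq_a[i - 1], None))
            i -= 1
        else:
            pairs.append((None, seq_b[j - 1]))
            j -= 1
    pairs.reverse()
    return score[n][m], pairs
```

For instance, aligning `["Reaching", "Grasping", "Transporting", "Releasing"]` with `["Reaching", "Grasping", "Releasing"]` gives a score of `5.0` and leaves `"Transporting"` opposite a gap, while `"Transporting"` facing `"Reaching"` would only earn the partial score `0.5` because both share the parent class `Movement`.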

The code for this work is available from the KnowRob account on GitHub.

KnowRob has moved to GitHub

The KnowRob code has moved to its own repository on GitHub as part of an effort to make development more open to the community. Several packages that were previously part of the tum-ros-pkg repository or of other private repositories are now available in the knowrob_addons and knowrob_human repositories.

Migration

If you use the KnowRob binary packages, you do not have to change anything. If you use the source installation, check out the new repository using:

git clone git@github.com:knowrob/knowrob.git

Mailing list

If you would like to stay up to date with recent KnowRob developments, please subscribe to the knowrob-users mailing list, which is used for important announcements about the KnowRob system.

KnowRob article in IJRR

An extensive article about the KnowRob system and the design decisions that led to its current architecture has been published in the International Journal of Robotics Research (IJRR). The article is currently the most coherent and up-to-date description of the system and the concepts behind it.