Aligning Specifications of Everyday Manipulation Tasks

Recently, there has been growing interest in enabling robots to use task instructions from the Internet and to share tasks they have learned with each other. To competently use, select and combine such instructions, robots need to determine whether different instructions describe the same task, which parts of them are similar, and which parts differ.

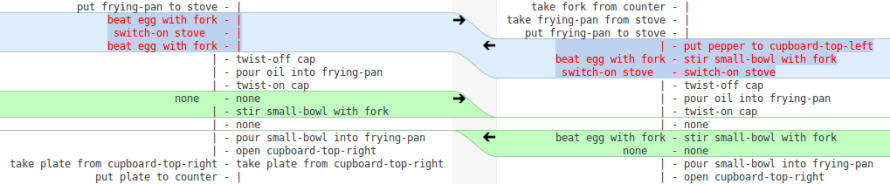

In a recent paper, we investigated techniques for automatically aligning symbolic task descriptions. We propose adapting and extending established sequence alignment algorithms, commonly used in bioinformatics, to make them applicable to robot action specifications. The extensions include methods for comparing complex sequence elements, for taking the semantic similarity of actions into account, and for aligning descriptions at different levels of granularity. We evaluate the algorithms on two large datasets of observations of human everyday tasks and show that they can align action sequences that different subjects performed in very different ways.
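To illustrate the general idea, here is a minimal sketch of global sequence alignment (Needleman-Wunsch, a standard bioinformatics algorithm) in which the usual match/mismatch score is replaced by a semantic similarity between actions. It is not the implementation from the paper: the toy taxonomy `PARENT`, the function names `ancestors`, `similarity` and `align`, and all score values are assumptions chosen for illustration only.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical toy taxonomy: each action class points to its parent class.
PARENT = {
    "PickUp": "Grasping",
    "Grab": "Grasping",
    "Grasping": "Action",
    "PutDown": "Releasing",
    "Releasing": "Action",
    "OpenDoor": "Opening",
    "Opening": "Action",
}


def ancestors(action: str) -> List[str]:
    """Return the action followed by all of its ancestors up to the root."""
    chain = [action]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def similarity(a: str, b: str) -> float:
    """Assumed scores: 2 if identical, 1 if related below the root, else -1."""
    if a == b:
        return 2.0
    shared = (set(ancestors(a)) & set(ancestors(b))) - {"Action"}
    return 1.0 if shared else -1.0


def align(
    seq_a: List[str],
    seq_b: List[str],
    score: Callable[[str, str], float] = similarity,
    gap: float = -1.0,
) -> Tuple[float, List[Tuple[Optional[str], Optional[str]]]]:
    """Globally align two action sequences; None marks a gap."""
    n, m = len(seq_a), len(seq_b)
    # table[i][j] holds the best score for aligning seq_a[:i] with seq_b[:j].
    table = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        table[i][0] = i * gap
    for j in range(1, m + 1):
        table[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            table[i][j] = max(
                table[i - 1][j - 1] + score(seq_a[i - 1], seq_b[j - 1]),
                table[i - 1][j] + gap,  # action in seq_a has no counterpart
                table[i][j - 1] + gap,  # action in seq_b has no counterpart
            )
    # Trace back from the bottom-right cell to recover one optimal alignment.
    pairs: List[Tuple[Optional[str], Optional[str]]] = []
    i, j = n, m
    while i > 0 or j > 0:
        if (i > 0 and j > 0 and
                table[i][j] == table[i - 1][j - 1] + score(seq_a[i - 1], seq_b[j - 1])):
            pairs.append((seq_a[i - 1], seq_b[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and table[i][j] == table[i - 1][j] + gap:
            pairs.append((seq_a[i - 1], None))
            i -= 1
        else:
            pairs.append((None, seq_b[j - 1]))
            j -= 1
    pairs.reverse()
    return table[n][m], pairs


total, pairs = align(["OpenDoor", "PickUp", "PutDown"], ["Grab", "PutDown"])
for left, right in pairs:
    print(left, "<->", right)
```

In this toy example, `PickUp` is paired with `Grab` because both fall under the assumed `Grasping` class, while `OpenDoor` has no counterpart in the second sequence and is aligned with a gap. A real system would take the similarity from an ontology of actions rather than a hand-written dictionary.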

The code for this work is available on GitHub under the KnowRob account.