Write an interface to your perception system

Note: This page describes the 'catkinized' version of KnowRob that uses the catkin build system and the pure Java-based rosjava. The documentation for the older version, which was based on the rosbuild build system and rosjava_jni, is available separately.

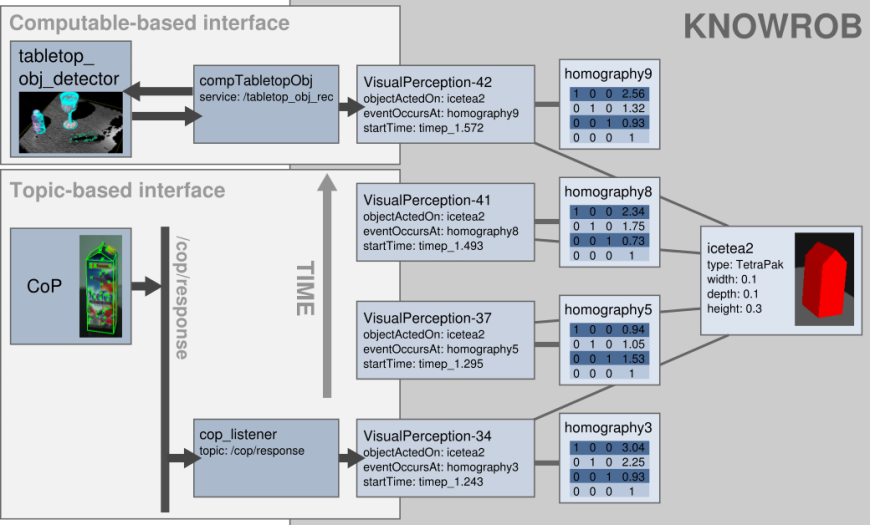

There are two main approaches to perception: some perception algorithms continuously detect objects and output the results (in ROS terminology, they publish the results on a topic), while others perform recognition only on demand (in ROS, when a service is called). These two kinds of systems need to be interfaced in different ways: the former requires a topic listener that records the published object detections and adds them to the knowledge base; the latter can be interfaced by computables that trigger the perception procedure when a query involves the respective information.

In this tutorial, we use two minimal examples to explain how to write interfaces to these two kinds of perception systems. Currently, there is no 'standard' perception system in ROS, so some manual work is still needed to interface your favourite object recognition system with KnowRob. We therefore created two 'dummy' perception systems that output simulated random object detections. It should, however, be easy to adapt the examples to any real perception system.

Before starting with the tutorial, it is important to first understand how object detections are represented in KnowRob. Further information on this topic can be found in Sections 3.2 and 6.1 in http://nbn-resolving.de/urn/resolver.pl?urn:nbn:de:bvb:91-diss-20111125-1079930-1-7.

Setting up the perception tutorial

The knowrob_perception_tutorial package is part of the knowrob_tutorials repository. Check it out into your catkin workspace and compile it using catkin_make:

cd /path/to/catkin_ws/src
git clone https://github.com/knowrob/knowrob_tutorials.git
cd /path/to/catkin_ws
catkin_make

Since a large part of the tutorial is written in Java, it is recommended to set up an Eclipse project and to inspect and edit the Java files from there. You can generate the Eclipse project files with the following command; afterwards, you only need to create a new “Java Project” in Eclipse for the directory in which you ran it. The classpath will then already include all dependencies of the knowrob_perception_tutorial package.

./gradlew eclipse

Interfacing topic-based perception systems

Publisher

The file src/edu/tum/cs/ias/knowrob/tutorial/DummyPublisher.java implements a simple publisher of object detections. It simulates a perception system that regularly detects objects and publishes these detections on the /dummy_object_detections topic once a second. Try to understand how these detections are generated by looking at the generateDummyObjectDetection() method. You can start the publisher using

rosrun knowrob_perception_tutorial dummy_publisher

Once the publisher is running, you can have a look at the generated object poses by calling the following command from a different terminal.

rostopic echo /dummy_object_detections

It should output messages of the following form:

type: DinnerFork
pose:
  header:
    seq: 0
    stamp:
      secs: 1357547989
      nsecs: 196672575
    frame_id: map
  pose:
    position:
      x: 0.300724629488
      y: 2.96134330258
      z: 1.56672560148
    orientation:
      x: 0.0
      y: 0.0
      z: 0.0
      w: 1.0
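To illustrate how a detection like the one above could be produced, here is a hypothetical stand-in for generateDummyObjectDetection(). It is not the code from DummyPublisher.java: the Detection class, the list of types (taken from the example outputs in this tutorial) and the coordinate ranges are assumptions. It picks a random type and position and uses the identity orientation seen in the output above.

```java
import java.util.Random;

// Hypothetical sketch of a dummy detection generator (not DummyPublisher.java).
final class DummyDetectionSketch {

    // Object types that appear in the example outputs of this tutorial.
    static final String[] TYPES = {"DinnerFork", "Cup", "DrinkingBottle", "TableKnife"};

    // Minimal stand-in for the ObjectDetection message: type, frame, position, quaternion.
    static final class Detection {
        final String type, frameId;
        final double x, y, z, qx, qy, qz, qw;

        Detection(String type, String frameId, double x, double y, double z,
                  double qx, double qy, double qz, double qw) {
            this.type = type; this.frameId = frameId;
            this.x = x; this.y = y; this.z = z;
            this.qx = qx; this.qy = qy; this.qz = qz; this.qw = qw;
        }

        @Override
        public String toString() {
            return type + " in " + frameId + " at (" + x + ", " + y + ", " + z + ")";
        }
    }

    static Detection generate(Random rnd) {
        String type = TYPES[rnd.nextInt(TYPES.length)];
        // Random position in an assumed 3 m x 3 m x 2 m box in the map frame.
        double x = 3.0 * rnd.nextDouble();
        double y = 3.0 * rnd.nextDouble();
        double z = 2.0 * rnd.nextDouble();
        // Identity orientation (qx, qy, qz, qw) = (0, 0, 0, 1).
        return new Detection(type, "map", x, y, z, 0.0, 0.0, 0.0, 1.0);
    }

    public static void main(String[] args) {
        Random rnd = new Random(42); // fixed seed for reproducible output
        for (int i = 0; i < 3; i++) {
            System.out.println(generate(rnd));
        }
    }
}
```

In the real publisher, each generated detection would additionally be stamped with the current time and published on /dummy_object_detections once a second.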

Subscriber

The counterpart on the client side that consumes the object detections is implemented in the file src/edu/tum/cs/ias/knowrob/tutorial/DummySubscriber.java. The subscriber uses two threads: the main thread subscribes to the topic and puts the incoming messages into the detections queue, and the updateKnowRobObjDetections thread processes all object detections in this queue and creates the corresponding representations in KnowRob. The rationale behind this setup is to keep the subscriber thread as lightweight as possible so that no messages are missed while the program is busy updating the knowledge base. In this example, creating an object detection in KnowRob is quite fast, but the two-thread structure becomes crucial once the processing gets more complex.
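The two-thread pattern can be sketched without ROS using a java.util.concurrent.BlockingQueue. In this hypothetical sketch, a loop stands in for the rosjava message callback and a short sleep stands in for the knowledge-base update; the "STOP" sentinel exists only so that the example terminates. The callback side only enqueues, so it never waits for the slow consumer.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Sketch of the producer/consumer structure; ROS and Prolog are simulated.
final class TwoThreadSketch {

    // Enqueues n fake detections and returns what the worker thread processed.
    static List<String> run(int n) throws InterruptedException {
        BlockingQueue<String> detections = new LinkedBlockingQueue<>();
        List<String> processed = Collections.synchronizedList(new ArrayList<>());

        // Worker thread: blocks on take() and performs the (possibly slow) update.
        Thread updater = new Thread(() -> {
            try {
                while (true) {
                    String msg = detections.take();
                    if (msg.equals("STOP")) break; // sentinel used only in this sketch
                    Thread.sleep(1);               // stand-in for a slow knowledge-base update
                    processed.add(msg);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        updater.start();

        // Stand-in for the subscriber callback: it only enqueues and returns immediately.
        for (int i = 0; i < n; i++) {
            detections.add("detection-" + i);
        }
        detections.add("STOP");
        updater.join();
        return processed;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(5));
    }
}
```

Because there is a single consumer and the queue is FIFO, detections are processed in the order in which they arrived.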

The following code snippet is the main part of the updateKnowRobObjDetections thread. It pops an ObjectDetection message from the detections queue, converts the pose quaternion into a 4×4 pose matrix and calls the create_object_perception predicate.

node.executeCancellableLoop(new CancellableLoop() {
    @Override
    protected void loop() throws InterruptedException {
        // Block until the subscriber thread has queued a new detection
        ObjectDetection obj = detections.take();
        // Convert position and orientation quaternion into a 4x4 homogeneous pose matrix
        Matrix4d p = quaternionToMatrix(obj.getPose().getPose());
        // Build the Prolog query; the 16 matrix entries are passed row by row
        String q = "create_object_perception(" +
                "'http://knowrob.org/kb/knowrob.owl#" + obj.getType() + "', [" +
                p.m00 + "," + p.m01 + "," + p.m02 + "," + p.m03 + "," +
                p.m10 + "," + p.m11 + "," + p.m12 + "," + p.m13 + "," +
                p.m20 + "," + p.m21 + "," + p.m22 + "," + p.m23 + "," +
                p.m30 + "," + p.m31 + "," + p.m32 + "," + p.m33 + "], " +
                "['DummyObjectDetection'], ObjInst)";
        // Create the object and its perception event in KnowRob
        PrologInterface.executeQuery(q);
    }
});
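The snippet relies on a quaternionToMatrix helper that is not shown. The following self-contained sketch shows one standard way to build such a matrix from a position and a unit quaternion (x, y, z, w), using plain arrays instead of javax.vecmath.Matrix4d, and how the 16 entries are flattened row by row as in the query string. It is an illustration under these assumptions, not the tutorial's implementation.

```java
import java.util.StringJoiner;

// Hypothetical quaternion-to-matrix helper on plain arrays (assumes a unit quaternion).
final class PoseMatrixSketch {

    // 4x4 homogeneous transform: rotation from (x, y, z, w), translation from (px, py, pz).
    static double[][] quaternionToMatrix(double px, double py, double pz,
                                         double x, double y, double z, double w) {
        return new double[][] {
            {1 - 2 * (y * y + z * z), 2 * (x * y - z * w),     2 * (x * z + y * w),     px},
            {2 * (x * y + z * w),     1 - 2 * (x * x + z * z), 2 * (y * z - x * w),     py},
            {2 * (x * z - y * w),     2 * (y * z + x * w),     1 - 2 * (x * x + y * y), pz},
            {0, 0, 0, 1}
        };
    }

    // Row-major flattening (m00, m01, ..., m33) into a Prolog list literal.
    static String toPrologList(double[][] m) {
        StringJoiner j = new StringJoiner(",", "[", "]");
        for (double[] row : m) {
            for (double v : row) {
                j.add(Double.toString(v));
            }
        }
        return j.toString();
    }

    public static void main(String[] args) {
        // Pose from the rostopic echo example (identity orientation).
        double[][] m = quaternionToMatrix(0.300724629488, 2.96134330258, 1.56672560148, 0, 0, 0, 1);
        System.out.println(toPrologList(m));
    }
}
```

For an identity orientation, the rotation part is the identity matrix and the last column holds the position, so the position ends up at list indices 3, 7 and 11.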

The create_object_perception predicate from the knowrob_perception package is defined as below. It creates a new object instance of the given object type (rdf_instance_from_class), creates an instance describing the perception event using the PerceptionTypes given as argument, links the object instance to this perception event, and sets the pose at which the object was detected. This convenience predicate assumes that the detected objects are always novel. By calling the individual predicates separately, one can also add perception events to existing object instances if the identity of the object is known.

create_object_perception(ObjClass, ObjPose, PerceptionTypes, ObjInst) :-
    rdf_instance_from_class(ObjClass, ObjInst),
    create_perception_instance(PerceptionTypes, Perception),
    set_object_perception(ObjInst, Perception),
    set_perception_pose(Perception, ObjPose).
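The difference between creating a novel object and attaching a new perception event to a known one can be illustrated with a toy in-memory model. Everything here is hypothetical: triples are string arrays, ex:detectedIn and ex:atPose are made-up property names, and the Class_xxxxxxxx naming only imitates the identifiers seen in the query results of this tutorial.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Toy model of the steps in create_object_perception (not the KnowRob implementation).
final class PerceptionModelSketch {
    final List<String[]> triples = new ArrayList<>();
    private final Random rnd = new Random(0); // fixed seed: reproducible identifiers

    // Step 1: new individual named Class_ followed by 8 random letters.
    String newInstance(String cls) {
        StringBuilder id = new StringBuilder(cls).append('_');
        for (int i = 0; i < 8; i++) {
            char base = rnd.nextBoolean() ? 'a' : 'A';
            id.append((char) (base + rnd.nextInt(26)));
        }
        String name = id.toString();
        triples.add(new String[] {name, "rdf:type", cls});
        return name;
    }

    // Steps 2-4: new perception event, link object to event, attach the pose to the event.
    String addPerception(String obj, String perceptionType, String pose) {
        String perception = newInstance(perceptionType);
        triples.add(new String[] {obj, "ex:detectedIn", perception}); // hypothetical property
        triples.add(new String[] {perception, "ex:atPose", pose});    // hypothetical property
        return perception;
    }

    // Convenience version: always creates a novel object first.
    String createObjectPerception(String cls, String perceptionType, String pose) {
        String obj = newInstance(cls);
        addPerception(obj, perceptionType, pose);
        return obj;
    }

    public static void main(String[] args) {
        PerceptionModelSketch kb = new PerceptionModelSketch();
        String fork = kb.createObjectPerception("DinnerFork", "DummyObjectDetection", "pose1");
        // Object identity known: add a second perception event to the same instance.
        kb.addPerception(fork, "DummyObjectDetection", "pose2");
        for (String[] t : kb.triples) {
            System.out.println(String.join(" ", t));
        }
    }
}
```

After both calls the model contains a single DinnerFork individual with two perception events, which is what calling the individual predicates achieves for an already known object.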

KnowRob integration

Whenever the subscriber is started, it creates the KnowRob-internal representations for all object detections that are received via the topic. It can be run from Prolog via the JPL Java-Prolog interface as defined in prolog/perception_tutorial.pl:

obj_detections_listener(Listener) :-
    jpl_new('org.knowrob.tutorials.DummySubscriber', [], Listener),
    jpl_list_to_array(['org.knowrob.tutorials.DummySubscriber'], Arr),
    jpl_call('org.knowrob.utils.ros.RosUtilities', runRosjavaNode, [Listener, Arr], _).

To start a rosjava node from KnowRob, you first need to instantiate the class containing the node definition, create an array containing its fully-qualified class name, and pass both the instance and the array to the runRosjavaNode method of RosUtilities. After starting the dummy publisher in another terminal, you can start KnowRob, create the topic listener, and query for object instances and their poses.

?- obj_detections_listener(L).
L = @'J#00000000000034668496'
% ... several rosjava INFO messages ...
% wait for a few seconds...
?- owl_individual_of(A, knowrob:'HumanScaleObject').
A = knowrob:'Cup_uGmuwKPo' ;
A = knowrob:'DrinkingBottle_TaVWzXre' ;
A = knowrob:'DrinkingBottle_rMsqkRjP' ;
A = knowrob:'DinnerFork_tDjYwuhx'
?- current_object_pose(knowrob:'DinnerFork_tDjYwuhx', P).
P = [1.0,0.0,0.0,2.9473,0.0,1.0,0.0,2.6113,0.0,0.0,1.0,0.2590,0.0,0.0,0.0,1.0].
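Since current_object_pose returns the pose as a row-major list of 16 numbers, the position is found at indices 3, 7 and 11. The sketch below extracts it from the printed list in the session above; parsing the printed text is done only to keep the example self-contained, and the class and method names are hypothetical.

```java
import java.util.Arrays;

// Hypothetical helper: read the translation from a row-major 4x4 pose list.
final class PoseListSketch {

    // Parses "[a,b,...]" into a double array.
    static double[] parse(String prologList) {
        String body = prologList.trim();
        body = body.substring(1, body.length() - 1); // strip '[' and ']'
        return Arrays.stream(body.split(","))
                .mapToDouble(s -> Double.parseDouble(s.trim()))
                .toArray();
    }

    // In a row-major homogeneous matrix the translation is the last column: indices 3, 7, 11.
    static double[] translation(double[] m) {
        if (m.length != 16) {
            throw new IllegalArgumentException("expected 16 values, got " + m.length);
        }
        return new double[] {m[3], m[7], m[11]};
    }

    public static void main(String[] args) {
        double[] m = parse("[1.0,0.0,0.0,2.9473,0.0,1.0,0.0,2.6113,0.0,0.0,1.0,0.2590,0.0,0.0,0.0,1.0]");
        System.out.println(Arrays.toString(translation(m))); // prints [2.9473, 2.6113, 0.259]
    }
}
```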

Interfacing service-based perception systems

Perception service

The dummy perception service is very similar to the dummy publisher. Whenever a request for an object detection is received, it responds with a simulated detection of a random object type at a random pose. In the example, the 'request' part of the service is empty – in real scenarios, the request will often specify properties of the perception method to be used. The code of the dummy service can be found in the file src/edu/tum/cs/ias/knowrob/tutorial/DummyService.java. It can be started with the following command:

rosrun knowrob_perception_tutorial dummy_service

Service client

The callObjDetectionService() method in the service client calls the dummy ROS service and returns the ObjectDetection message contained in the service response. The code can be found in the file src/edu/tum/cs/ias/knowrob/tutorial/DummyClient.java.
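Because callObjDetectionService() returns the detection directly, it has to wait for the service response. One way to implement such a blocking call on top of a callback-style client is a CompletableFuture with a timeout, sketched below. AsyncService is a hypothetical interface standing in for a real service client; the sketch does not use or reproduce the rosjava API, and the tutorial does not say whether DummyClient works this way.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Consumer;

// Sketch: wrapping a callback-based call into a blocking call with a timeout.
final class BlockingCallSketch {

    // Hypothetical callback-style client (not the rosjava interface).
    interface AsyncService<Req, Res> {
        void call(Req request, Consumer<Res> onSuccess, Consumer<Exception> onFailure);
    }

    static <Req, Res> Res callBlocking(AsyncService<Req, Res> service, Req request, long timeoutMs)
            throws InterruptedException, ExecutionException, TimeoutException {
        CompletableFuture<Res> result = new CompletableFuture<>();
        // The callbacks complete the future from whichever thread delivers the response.
        service.call(request, result::complete, result::completeExceptionally);
        // Block the caller (e.g. a Prolog query) until the response arrives or the timeout expires.
        return result.get(timeoutMs, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws Exception {
        // Fake service that answers asynchronously with a fixed object type.
        AsyncService<Void, String> fake = (req, ok, err) ->
                new Thread(() -> ok.accept("Cup")).start();
        System.out.println(callBlocking(fake, null, 1000));
    }
}
```

The timeout is a design choice: without it, a query would block indefinitely if the perception service is not running or never answers.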

KnowRob integration

While the Java side of the service client is much simpler than the topic listener, the integration with KnowRob is a bit more complex. The reason is that the service call needs to be actively triggered, while the topic listener just runs in the background in a separate thread. This means that the inference needs to be aware of the possibility of acquiring information about object detections by calling this service. Such functionality can be realized using computables that describe for an OWL class or property how individuals of this class or values of this property can be computed. To better understand the following steps, it is recommended to have completed the tutorial on defining computables.

In a first step, we need to implement a Prolog predicate that performs the service call, processes the returned information, adds it to the knowledge base, and returns the identifiers of the detected object instances. This predicate is implemented in prolog/perception_tutorial.pl. In contrast to the topic-based example above, which performed most of the processing in Java, this example shows how more of the processing can be done in Prolog:

comp_object_detection(ObjInst, _ObjClass) :-
    % Call the DetectObject service for retrieving a new object detection.
    % The method returns a reference to the Java ObjectDetection message object
    jpl_new('org.knowrob.tutorials.DummyClient', [], Client),
    jpl_list_to_array(['org.knowrob.tutorials.DummyClient'], Arr),
    jpl_call('org.knowrob.utils.ros.RosUtilities', runRosjavaNode, [Client, Arr], _),
    jpl_call(Client, 'callObjDetectionService', [], ObjectDetection),
    % Read type -> simple string; combine with KnowRob namespace
    jpl_call(ObjectDetection, 'getType', [], T),
    atom_concat('http://knowrob.org/kb/knowrob.owl#', T, Type),
    % Read the pose and convert it into a row-major list of 16 numbers
    jpl_call(ObjectDetection, 'getPose', [], PoseMatrix),
    knowrob_coordinates:matrix4d_to_list(PoseMatrix, PoseList),
    % Create the object representations in the knowledge base.
    % The third argument is the type of object perception, i.e.
    % the method by which the object has been detected
    create_object_perception(Type, PoseList, ['DummyObjectDetection'], ObjInst).

The predicate first creates a DummyClient instance, starts it as a rosjava node, and calls its callObjDetectionService method, which returns an ObjectDetection object. It then reads the object's type and pose, combines the type with the KnowRob namespace, and converts the pose into a Prolog list that represents the 4×4 pose matrix row by row. Finally, it calls the same create_object_perception predicate that was used in the previous example. This predicate can be used to query the perception service manually from Prolog (assuming the perception service is running in another terminal):

rosrun rosprolog rosprolog knowrob_perception_tutorial
?- comp_object_detection(Obj, _).
Obj = 'http://ias.cs.tum.edu/kb/knowrob.owl#Cup_vUXiHMJy'.

Such a manual query, however, requires the user to know that this service exists. It also requires adapting the query whenever the context changes, e.g. when different or multiple recognition systems are used. We can avoid these problems by wrapping the predicate into a computable. The following OWL code defines a computable Prolog class for the example predicate; with this definition, the predicate is called automatically whenever the user asks for instances of knowrob:HumanScaleObject, as long as the service interface is available:

<computable:PrologClass rdf:about="#computeObjectDetections">
    <computable:command rdf:datatype="&xsd;string">comp_object_detection</computable:command>
    <computable:cache rdf:datatype="&xsd;string">cache</computable:cache>
    <computable:visible rdf:datatype="&xsd;string">unvisible</computable:visible>
    <computable:target rdf:resource="&knowrob;HumanScaleObject"/>
</computable:PrologClass>

Instead of calling the service directly, we can now query for objects and obtain the detections generated by our service in addition to objects that were already in the knowledge base, e.g. from a semantic map:

?- rdfs_instance_of(A, knowrob:'HumanScaleObject').
A = knowrob:'TableKnife_vUXiHMJy'.
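Conceptually, the computable decouples the query from the perception back-end: the caller names only the class, and the results of all registered procedures are merged with what is already asserted. The following toy registry illustrates this idea. It is hypothetical, ignores the class hierarchy as well as caching and visibility, and is not how KnowRob implements computables.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

// Toy model: instance queries merge asserted individuals with computed ones.
final class ComputableRegistrySketch {
    private final Map<String, List<String>> asserted = new HashMap<>();
    private final Map<String, List<Supplier<List<String>>>> providers = new HashMap<>();

    void assertInstance(String cls, String id) {
        asserted.computeIfAbsent(cls, k -> new ArrayList<>()).add(id);
    }

    // Plays the role of the computable:target / computable:command pair.
    void register(String targetClass, Supplier<List<String>> provider) {
        providers.computeIfAbsent(targetClass, k -> new ArrayList<>()).add(provider);
    }

    // The caller names only the class; it does not need to know which services exist.
    List<String> instancesOf(String cls) {
        List<String> result = new ArrayList<>(asserted.getOrDefault(cls, List.of()));
        for (Supplier<List<String>> p : providers.getOrDefault(cls, List.of())) {
            result.addAll(p.get());
        }
        return result;
    }

    public static void main(String[] args) {
        ComputableRegistrySketch kb = new ComputableRegistrySketch();
        kb.assertInstance("HumanScaleObject", "Cup_fromSemanticMap");               // hypothetical ID
        kb.register("HumanScaleObject", () -> List.of("TableKnife_fromService"));  // hypothetical ID
        System.out.println(kb.instancesOf("HumanScaleObject"));
    }
}
```

Registering a second recognition system only adds another provider; the query itself stays unchanged, which is the benefit described above.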

Other kinds of perception systems

In this tutorial, we have concentrated on object recognition as a special case of perception. There are of course other perception tasks like the identification and pose estimation of humans, recognition and interpretation of spoken commands, etc. Most of these systems can however be interfaced in a very similar way: If they produce information continuously and asynchronously, a topic-based interface can be used. If they compute information on demand, the computable-based interface can be adapted.

Adapting the examples to your system

To keep the examples as simple and self-contained as possible, we have defined our own dummy components and messages. Your perception system will probably use slightly different messages and may provide more or less information. In this case, you will need to adapt the service client or topic listener to correctly extract information from your messages. After creating the object instance with create_object_perception, you can use rdf_assert to add further properties to the object (e.g. color or weight).
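As an example of such an adaptation, the following hypothetical adapter maps the labels of some other recognizer to KnowRob class IRIs and drops uncertain detections before anything is written to the knowledge base. MyDetection, its label and confidence fields, the label table and the confidence threshold are assumptions for illustration; the class names and namespace are the ones used in this tutorial.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical adapter from a custom detection message to a KnowRob class IRI.
final class DetectionAdapterSketch {

    // Stand-in for your own message type (fields are assumptions).
    static final class MyDetection {
        final String label;
        final double confidence;

        MyDetection(String label, double confidence) {
            this.label = label;
            this.confidence = confidence;
        }
    }

    // Maps recognizer labels to KnowRob class local names.
    static final Map<String, String> LABEL_TO_CLASS = Map.of(
            "fork", "DinnerFork",
            "cup", "Cup",
            "bottle", "DrinkingBottle");

    static final String NS = "http://knowrob.org/kb/knowrob.owl#";

    // Full class IRI, or empty if the label is unknown or the detection is too uncertain.
    static Optional<String> toKnowRobClass(MyDetection d, double minConfidence) {
        if (d.confidence < minConfidence) {
            return Optional.empty();
        }
        return Optional.ofNullable(LABEL_TO_CLASS.get(d.label)).map(c -> NS + c);
    }

    public static void main(String[] args) {
        System.out.println(toKnowRobClass(new MyDetection("fork", 0.9), 0.5));
    }
}
```

The resulting IRI can then be used as the first argument of create_object_perception, exactly like the type string in the examples above.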