Reasoning about objects

| This page describes the 'catkinized' version of KnowRob that uses the catkin buildsystem and the pure Java-based rosjava. The documentation for the older version, which was based on the rosbuild buildsystem and rosjava_jni, can be found here. |

|---|

Before starting this tutorial, you should have completed the following ones:

Load an environment specification

We have separated the semantic environment map and the set of (smaller) objects inside the environment, such as objects inside the cupboards and drawers. This is commonly a good practice since it allows to use the same (often mostly static) map in different settings. In real tasks, the set of objects is usually detected by the robot during operation and updated over time, but for testing purposes, we load some objects from a file.

With the following commands, you can launch KnowRob including the semantic map of our laboratory kitchen, and then load the OWL file containing a set of demo objects. You need to have the knowrob_tutorials repository in your catkin workspace.

?- rosrun rosprolog rosprolog knowrob_basics_tutorial ?- owl_parse('package://knowrob_map_data/owl/ccrl2_semantic_map.owl'). ?- owl_parse('package://knowrob_basics_tutorial/owl/ccrl2_map_objects.owl').

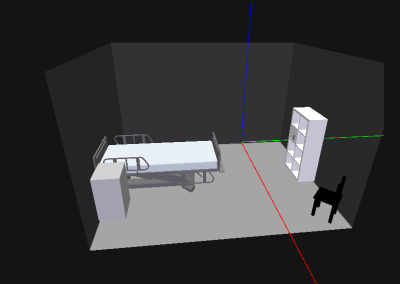

Visualize the environment, including CAD models of objects

Once the map and the example objects have been loaded, you can start the visualization canvas, open the web-based visualization in your browser, and add the objects to this 3D canvas. If definitions for CAD models of objects are loaded, these models are automatically used for visualizing object instances. Otherwise, the system uses grey boxes as default visualization models.

Please make sure that you have launched the 'rosbridge' server in another terminal using:

roslaunch rosbridge_server rosbridge_websocket.launch

You can then send the following commands from your KnowRob shell:

?- visualisation_server. % Ignore the INFO output on the console % Open your browser at http://localhost:1111 ?- owl_individual_of(Map, knowrob:'SemanticEnvironmentMap'), marker_update(object(Map)).

Similarly, we can also visualize small objects in the kitchen:

(owl_individual_of(A, knowrob:'FoodUtensil'); owl_individual_of(A, knowrob:'FoodVessel'); owl_individual_of(A, knowrob:'FoodOrDrink')), marker_update(object(A)).

Query for objects with certain properties

Since all objects in the map are instances of the respective object classes, one can query for objects that have certain properties or belong to a certain class, for example:

% perishable objects: ?- owl_individual_of(A, knowrob:'Perishable'). A = map_obj:'butter1' ; A = map_obj:'buttermilk1' ; A = map_obj:'cheese1' ; A = map_obj:'milk1' ; A = map_obj:'yogurt1' ; A = map_obj:'sausage1' % all HandTools (e.g. silverware) ?- owl_individual_of(A, knowrob:'HandTool'). A = map_obj:'knife1' ; A = map_obj:'fork1' % all FoodVessels (i.e. pieces of tableware) ?- owl_individual_of(A, knowrob:'FoodVessel'). A = map_obj:'cup1' ; A = map_obj:'plate1' ; A = map_obj:'saucer1' % everything with a handle: ?- owl_has(A, knowrob:properPhysicalParts, H), owl_individual_of(H, knowrob:'Handle'). A = map_obj:'Dishwasher37', H = map_obj:'Handle145' [...]

Querying for qualitative spatial relations

Using computables that calculate qualitative spatial relations between objects, we can query e.g. in which container we expect to find sausage1, ask for the content of Refrigerator67, or ask what is on top of Dishwasher37:

?- rdf_triple(knowrob:'in-ContGeneric', map_obj:sausage1, C). C = knowrob:'Refrigerator67' ?- rdf_triple(knowrob:'in-ContGeneric', O, knowrob:'Refrigerator67'). O = map_obj:'cheese1' ; O = map_obj:'milk1' ; O = map_obj:'sausage1' ?- rdf_triple(knowrob:'on-Physical', A, knowrob:'Dishwasher37'). A = map_obj:'cup1' ; A = knowrob:'CounterTop205'

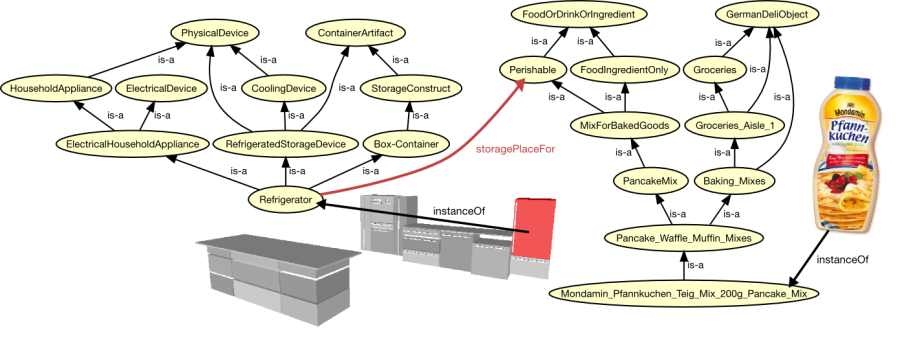

Inferring likely storage locations

For some tasks, robots need to reason about the nominal locations of objects, for example when cleaning up or when unpacking a shopping basket. There are different techniques for inferring the location where an object should be placed.

- Using assertions of the storagePlaceFor property is a rather generic, though not very adaptive technique that allows to state e.g. that perishable items belong into the refrigerator. It does not require any knowledge about the environment, but since it works on the level of object classes, it cannot choose between containers of the same type, e.g. different cupboards.

- A finer-grained solution is based on organizational principles that places objects at the location where semantically similar objects are stored. It requires some (partial) knowledge about the distribution of other objects in the environment.

Querying for likely storage locations

The simple option based on the storagePlaceFor predicate can be queried as follows in order to determine where an object (instance or class) shall be stored, or which known objects are to be stored in a given container:

?- storagePlaceFor(Place, map_obj:'butter1'). Place = knowrob:'Refrigerator67' ?- storagePlaceFor(knowrob:'Refrigerator67', Obj). Obj = map_obj:'butter1' ; Obj = map_obj:'buttermilk1' ; Obj = map_obj:'cheese1' ; Obj = map_obj:'milk1'; [...]

An extension of the storagePlaceFor predicate also reads why the system came to the conclusion that something is to be stored at some place:

?- storagePlaceForBecause(Place, map_obj:'butter1', Because). Place = knowrob:'Refrigerator67', Because = knowrob:'Perishable'

TODO: add orgprinciples calls

Checking for objects that are not at their storage location

By combining the semantic map and the current object locations with the knowledge about the locations where objects should be, the robot can determine which objects are misplaced. This may for instance be useful when tidying up an environment. The following code first compute which objects are inside which other ones, then selects those that are food or drink, computes their most likely storage place (which, in this example, is usually the refrigerator), and compares the two places. As a result, the system finds out that the butter and the buttermilk are misplaced in a drawer and in the oven, respectively.

?- rdf_triple(knowrob:'in-ContGeneric', Obj, ActualPlace), owl_individual_of(Obj, knowrob:'FoodOrDrink'), storagePlaceFor(StoragePlace,Obj), ActualPlace\=StoragePlace. Obj = map_obj:'butter1', ActualPlace = knowrob:'Drawer31', StoragePlace = knowrob:'Refrigerator67' ; Obj = map_obj:'buttermilk1', ActualPlace = knowrob:'Oven19', StoragePlace = knowrob:'Refrigerator67'

You will notice that it takes a bit of time to answer the query: This is because the first predicate searches for all objects that are inside another one, and for doing this has to compute all pairwise 'inside' relations between all objects. By restricting the set of objects to be considered as inner and outer objects, this could be sped up a lot. This is one example of a general property of Prolog: The efficiency depends on how the query is formulated.

Reading the object component hierarchy

Composed objects and their parts are linked by a 'parts' hierarchy, described using the properPhysicalParts property in OWL. This property is transitive, i.e. a part of a part of an object is also a part of the object itself. You can read all parts and sub-parts of an object using owl_has, which takes the transitivity into account. In the example below, Handle160 is a part of Door70, which by itself is part of Refrigerator67.

?- owl_has(knowrob:'Refrigerator67', knowrob:properPhysicalParts, P). P = knowrob:'Door70' ; P = knowrob:'Handle160' ; P = knowrob:'Hinge70'

Representation of articulated objects such as cupboards

Articulated objects, e.g. cupboards, that have doors or drawers are represented in a special way to describe, on the one hand, the component hierarchy, and, on the other hand, which connections are fixed and which are movable. Like for other composed objects, there is a part-of hierarchy (properPhysicalParts). Joints are parts of the cupboard/parent object, and are fixed-to (connectedTo-Rigidly) both the parent and the child (e.g. the door). In addition, the child is hingedTo or prismaticallyConnectedTo the parent.

Joints are described using the following properties, which are compatible to the representation used by the [http://www.ros.org/wiki/articulation ROS articulation stack].

- Type: At the moment, rotational and prismatic joints are supported (knowrob:'Hinge' and knowrob:'PrismaticJoint')

- Parent, Child: resp. object instances

- Pose: like for normal objects using e.g. a SemanticMapPerception instance

- Direction: vector giving the opening direction of a prismatic joint

- Radius: radius of a rotational joint (e.g. between handle and hinge)

- Qmin, Qmax: lower and upper joint limits

Reading articulation information

There are some helper predicates for reading, creating, updating and deleting joints from articulated objects. This task is on the one hand rather common, on the other hand somewhat complex because the structure visualized in the previous image needs to be established. To create a joint of type knowrob:'HingedJoint' between two object instances roboearth:'IkeaExpedit2x40' and roboearth:'IkeaExpeditDoor13' at position (1,1,1) with unit orientation, radius 0.33m and joint limits 0.1 and 0.5 respectively, one can use the following statement:

create_joint_information('HingedJoint', roboearth:'IkeaExpedit2x40', roboearth:'IkeaExpeditDoor13', [1,0,0,1,0,1,0,1,0,0,1,1,0,0,0,1], [], '0.33', '0.1', '0.5', Joint).

If a prismatic joint is to be created instead, the empty list [] needs to be replaced with a unit vector describing the joint's direction, e.g. [0,0,1] for a joint opening in z-direction, and the joint type needs to be set as 'PrismaticJoint'. Joint information can conveniently be read using the following predicate that requires a joint instance as argument:

read_joint_information(Joint, Type, Parent, Child, Pose, Direction, Radius, Qmin, Qmax).

To update joint information, one can use the following predicate:

update_joint_information(Joint, 'HingedJoint', [1,0,0,2,0,1,0,2,0,0,1,2,0,0,0,1], [1,2,3], 0.32, 1.2, 1.74).

Reading and converting units of measure

All numerical values in KnowRob can optionally be annotated with a unit of measure. To keep the system backwards compatible, values without annotation are interpreted to be given in the respective SI unit (e.g. meter, second). The full article including an explanation of the design choices and links to further information can be found here.